What Is AI Bias? Causes, Types, & Real-World Impacts

AI bias is a systematic tendency of an artificial intelligence system to produce outputs that unfairly favor or disadvantage certain groups or outcomes.

It arises when training data, model design, or human oversight introduce patterns that distort results. These distortions can affect decision-making in areas such as healthcare, finance, hiring, and cybersecurity, reducing reliability and trust in AI systems.

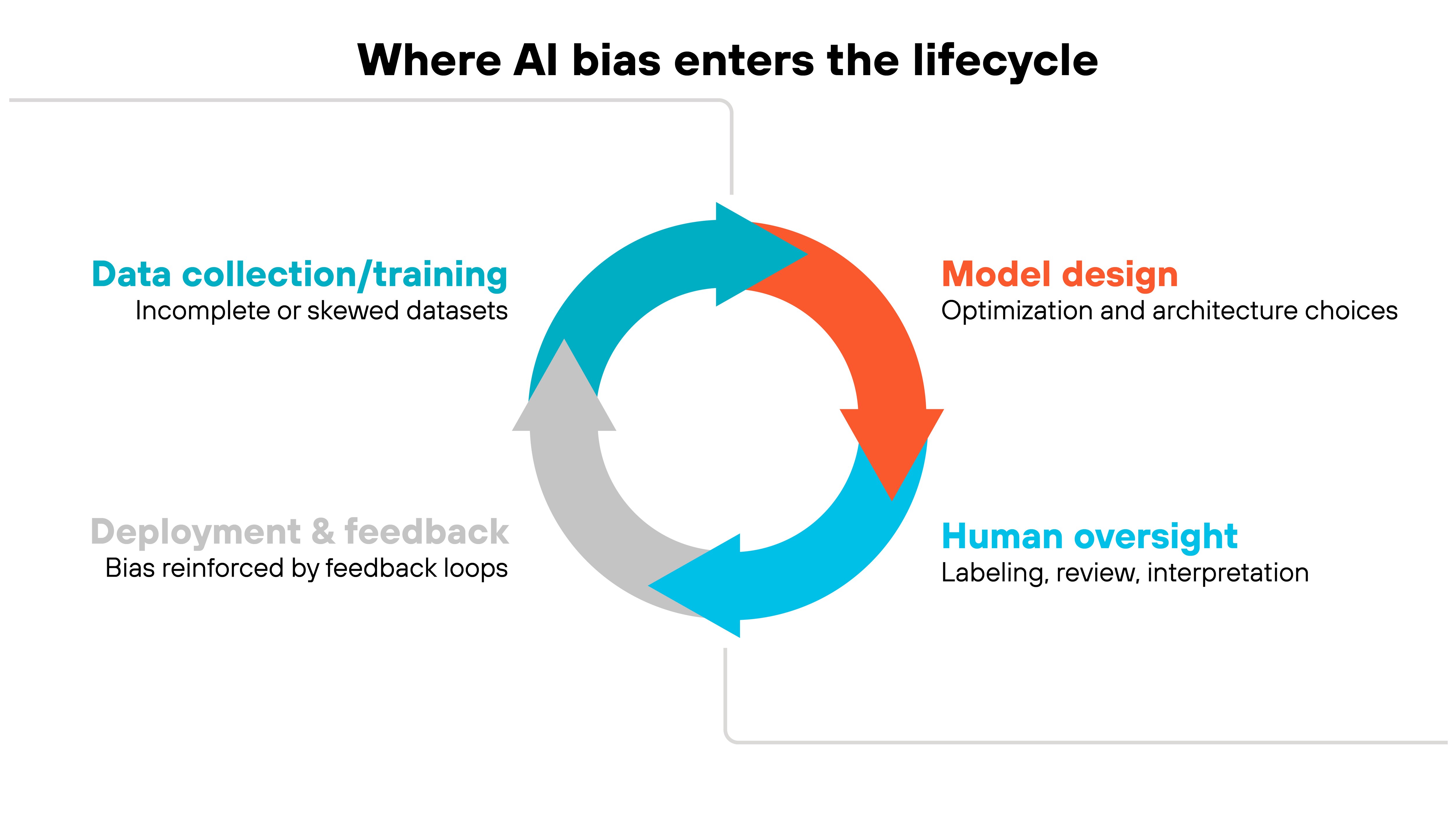

Why does AI bias happen?

Bias in AI doesn't appear by chance. It usually traces back to specific points in how systems are built, trained, and used. Tracing those points helps show not only where bias enters the pipeline but also why it persists.

- One of the most common causes is incomplete or skewed training data.

  If the data doesn't represent the real-world population or task, the system carries those gaps forward. Historical records that already contain discrimination can also transfer those patterns directly into outputs.

- Model design adds another layer.

  When optimization goals prioritize efficiency or overall accuracy without any fairness objective, results can tilt toward majority groups. Small design decisions, such as which features are emphasized or how error rates are balanced, can make those imbalances worse.

- Bias also develops once systems are deployed.

  Predictions influence actions, those actions generate new data, and that data reinforces the model's assumptions. Over time, this feedback loop can lock bias into place, even when the original design was more balanced.

- Finally, human judgment plays a role throughout the lifecycle.

  People label training data, adjust parameters, and interpret outputs. Their own cultural assumptions inevitably shape those steps, which means bias is not just technical but socio-technical: it is introduced by both machines and the people guiding them.

Together, these factors explain why bias in AI is persistent. Data, design, deployment, and human oversight interact in ways that make it difficult to isolate a single cause. Addressing bias requires attention across the full lifecycle, not just one stage in isolation.
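To make the deployment feedback loop concrete, here is a minimal toy simulation. Everything in it is an assumption for illustration: two areas with identical true incident rates, a hypothetical allocation rule that sends every patrol to the area with the most recorded incidents, and made-up starting counts. It is a sketch of the dynamic, not a model of any real system.

```python
def simulate_feedback_loop(initial_records, true_rates, patrols_per_round, rounds):
    """Each round, send all patrols to the area with the most recorded incidents.

    Incidents are only recorded where patrols are sent, so the records
    reflect where the system looked, not where incidents actually happen.
    """
    records = list(initial_records)
    for _ in range(rounds):
        target = records.index(max(records))  # area the "model" flags
        records[target] += patrols_per_round * true_rates[target]
    return records


# Hypothetical numbers: both areas have the same true rate (0.5),
# but area 0 starts with one extra recorded incident.
records = simulate_feedback_loop(
    initial_records=[11, 10],
    true_rates=[0.5, 0.5],
    patrols_per_round=10,
    rounds=20,
)
share_area_0 = records[0] / sum(records)
print(records, round(share_area_0, 2))
```

A one-incident head start is enough for area 0 to end up with more than 90% of all recorded incidents, even though the underlying rates are identical. The data the system generates confirms its own starting assumption.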

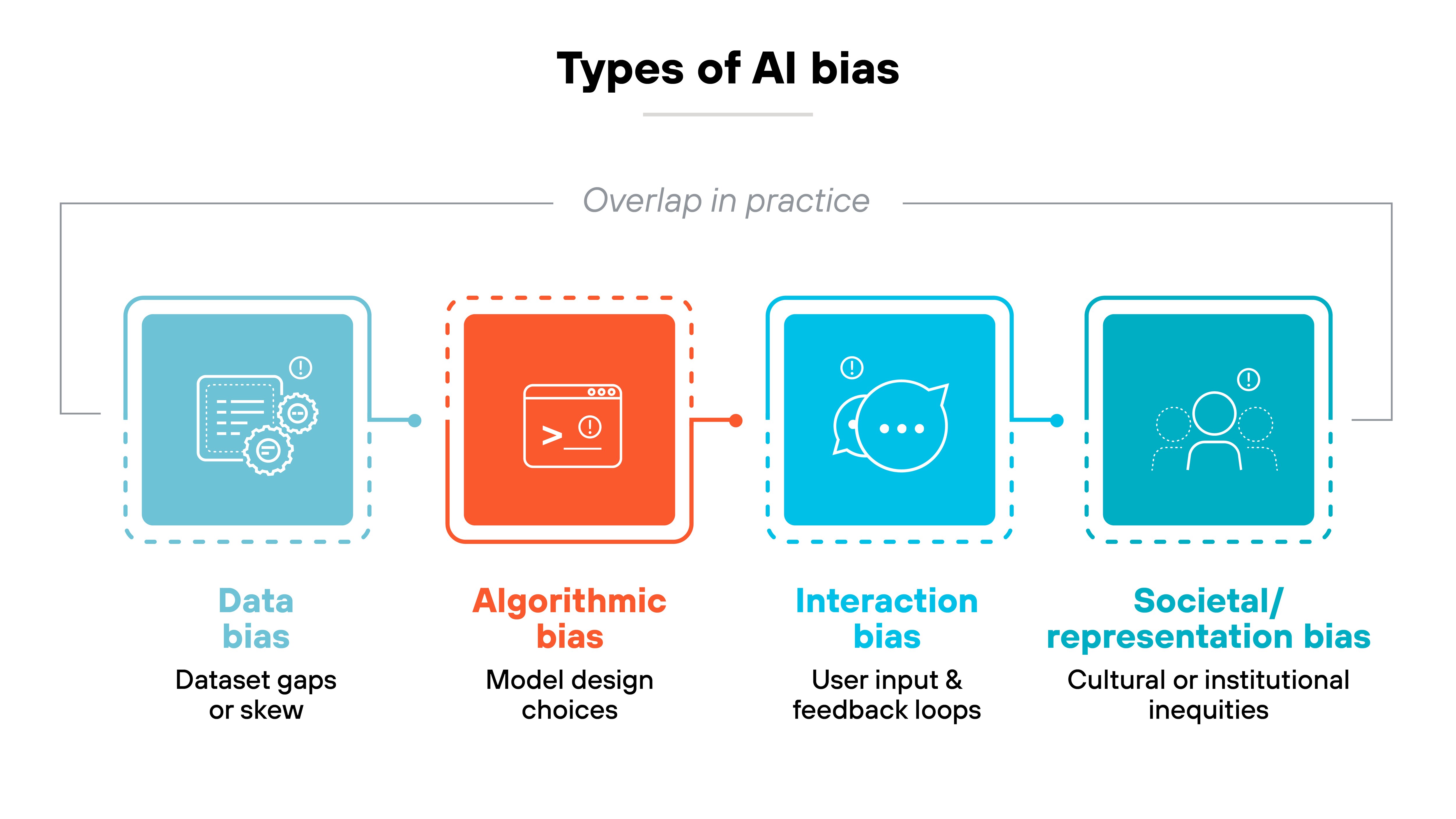

What types of AI bias exist?

Now that we've covered how bias gets introduced into AI systems, let's walk through how it appears in practice.

Bias doesn't appear in a single form. It takes shape in different ways depending on where it enters the system.

Understanding the main types helps separate where bias comes from and how it shows up in outputs. That distinction makes bias easier to evaluate and address.

Data bias

Data bias appears when outputs reflect distortions in the underlying dataset.

It shows up as uneven performance across groups or tasks when training data fails to capture the full real-world population, relies on limited coverage, or embeds historical skew. Proxy variables, such as a postal code that correlates with a protected attribute, can also leak sensitive traits into results.
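One rough way to check whether a feature acts as a proxy is to ask how well it predicts the sensitive attribute on its own. The sketch below is an illustrative heuristic, not a standard test: the function name `proxy_strength` and the sample data are hypothetical, and it simply compares "guess the most common group for each feature value" against "always guess the overall majority group".

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, sensitive_values):
    """Return (accuracy guessing from the feature, accuracy of a majority guess).

    A large gap between the two suggests the feature carries information
    about the sensitive attribute and may act as a proxy for it.
    """
    by_feature = defaultdict(Counter)
    for feature, sensitive in zip(feature_values, sensitive_values):
        by_feature[feature][sensitive] += 1

    n = len(sensitive_values)
    # Best guess per feature value: its most common sensitive group.
    guessed_right = sum(c.most_common(1)[0][1] for c in by_feature.values())
    # Baseline: always guess the overall most common group.
    baseline_right = Counter(sensitive_values).most_common(1)[0][1]
    return guessed_right / n, baseline_right / n


# Hypothetical data: postal area vs. a sensitive group label.
postal_area = ["A", "A", "A", "A", "B", "B", "B", "B"]
group = ["x", "x", "x", "y", "y", "y", "y", "x"]

from_feature, baseline = proxy_strength(postal_area, group)
print(from_feature, baseline)  # 0.75 0.5
```

Here the postal area alone recovers the group 75% of the time against a 50% baseline, so dropping the sensitive column would not remove the signal from the data.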

Algorithmic bias

Algorithmic bias surfaces when the model's internal design amplifies imbalance.

Optimization choices, hyperparameters, feature weighting, or loss functions may systematically favor certain outcomes over others. Even if data is balanced, model architecture can tilt results toward majority patterns or degrade accuracy on underrepresented cases.

Interaction bias

Interaction bias develops over time through user engagement.

Systems that adapt to input, reinforcement, or feedback loops can internalize stereotypes and repeat them in outputs. This bias evolves dynamically during deployment, often in ways that designers didn't anticipate during training.

Societal and representation bias

Societal bias becomes visible when AI mirrors existing cultural or institutional inequities.

Outputs may underrepresent some groups, reinforce demographic associations, or carry geographic and linguistic skew. Because these patterns stem from broader social data, they often persist even after technical adjustments.

Overlap in practice

Types of bias rarely appear in isolation.

A system may combine data, algorithmic, interaction, and societal bias at once, producing complex effects that are hard to disentangle. Recognizing this overlap is essential for assessing fairness and deciding where interventions will have the most impact.

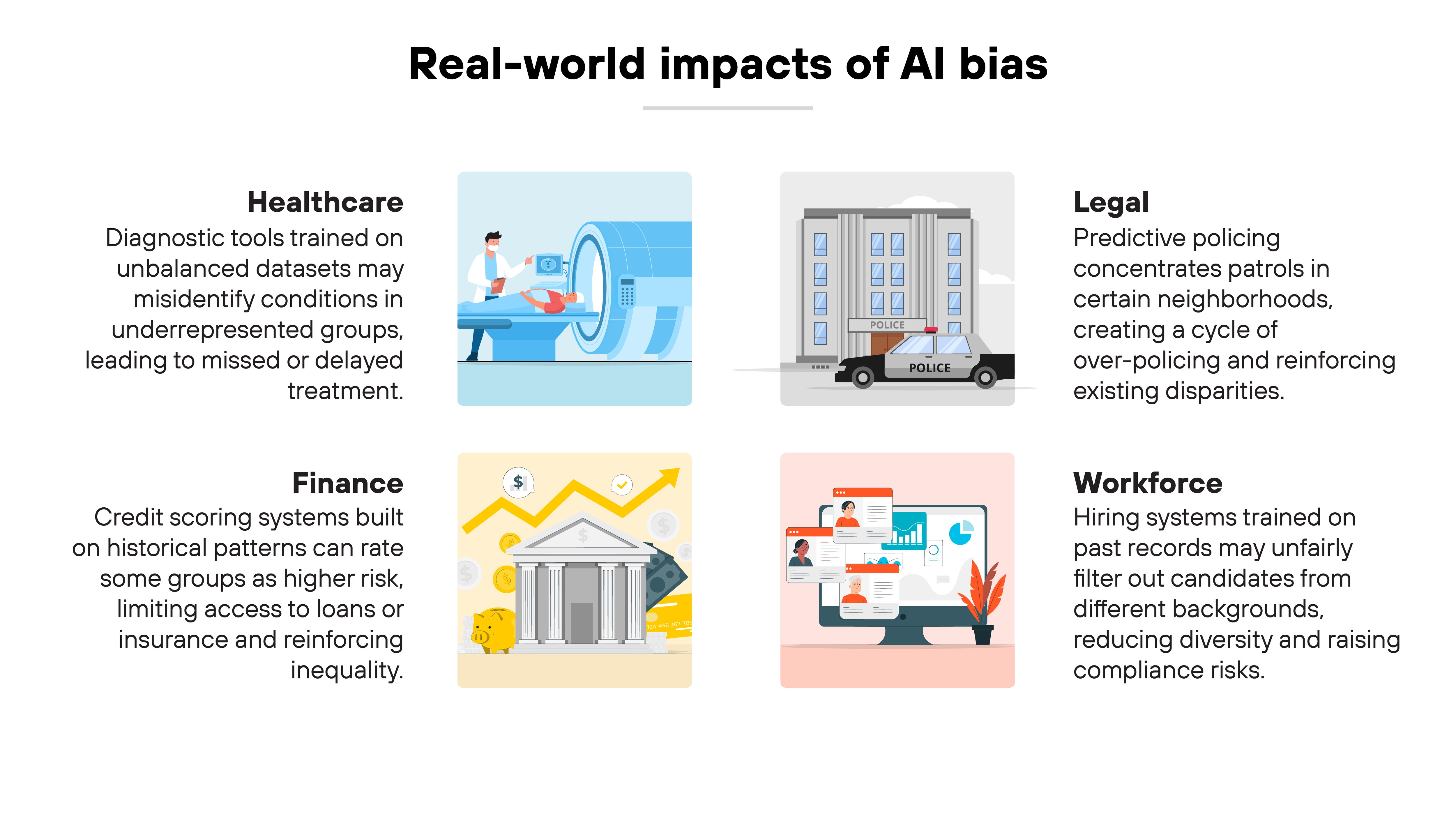

What are the real-world impacts of AI bias?

The effects of AI bias show up most clearly when models are used in high-stakes environments. These aren't abstract errors. They shape outcomes that directly affect people's health, livelihoods, and opportunities.

- Healthcare is one of the clearest examples.

  When diagnostic tools are trained on unbalanced datasets, they can misidentify conditions in underrepresented groups. That leads to missed or delayed treatment. The result isn't just technical inaccuracy. It's a patient safety risk.

- Finance shows another dimension.

  Credit scoring models that rely on historical patterns may rate certain groups as higher risk, even when qualifications are the same. That limits access to loans or insurance. Over time, the bias compounds, restricting economic participation and reinforcing inequality.

- Legal systems face similar risks.

  Predictive policing often concentrates attention on neighborhoods already under scrutiny, creating a cycle of over-policing. Sentencing algorithms trained on past cases can mirror disparities that courts are trying to reduce. Once these tools are embedded in justice processes, bias doesn't just persist. It gets harder to challenge.

- Workforce decisions are affected too.

  Hiring systems that learn from past records often prioritize resumes that look like those of previous hires. That means applicants from different educational or demographic backgrounds may be unfairly filtered out. Organizations don't just lose diversity. They also face compliance and trust concerns when decisions aren't transparent.

The common thread across these examples is that bias undermines fairness and reliability. It creates ethical concerns, operational risks, and a loss of public trust.

Ultimately, addressing bias isn't just about improving model accuracy. It's about ensuring AI can be depended on in the places where the stakes are highest.

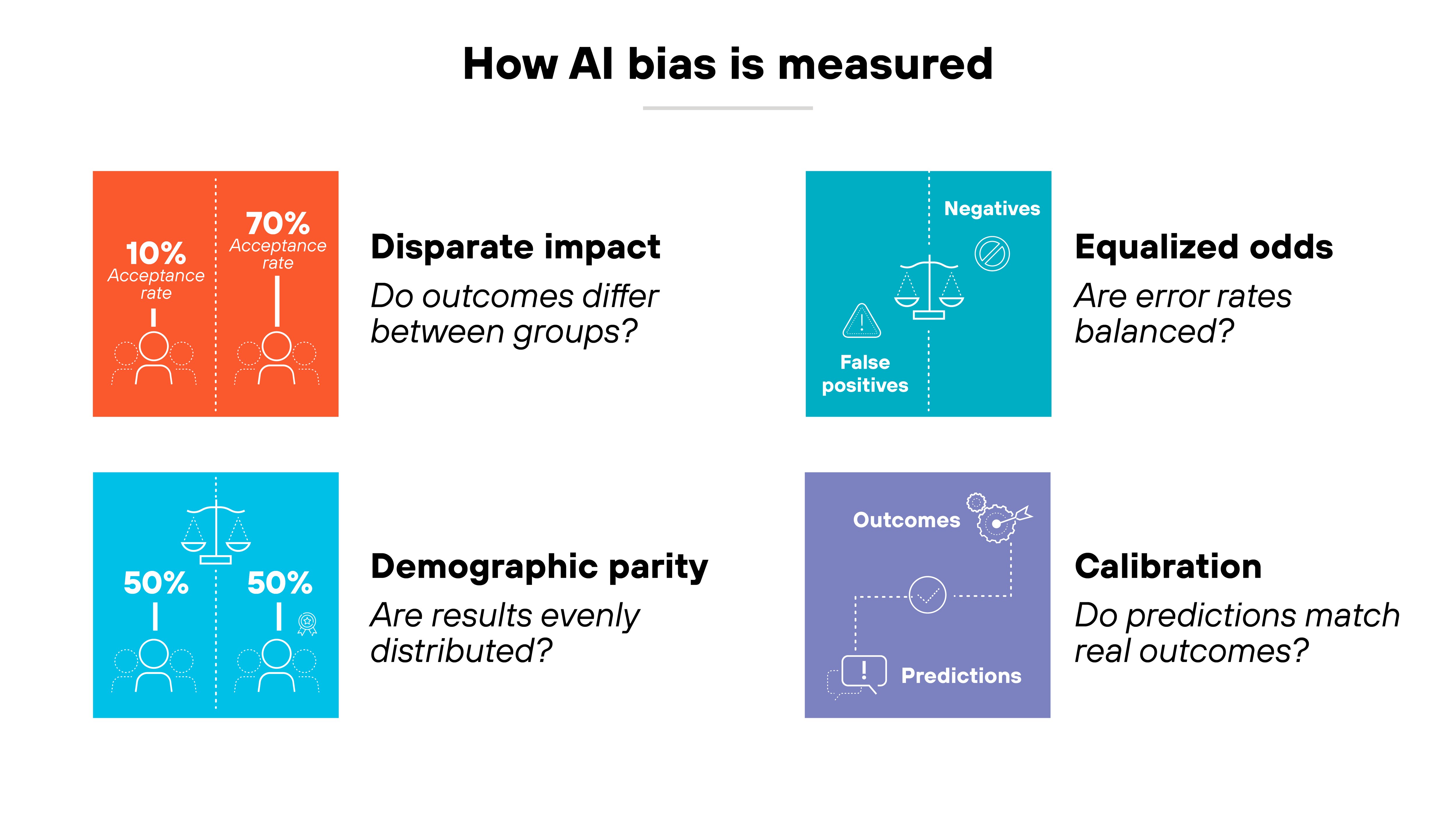

How is AI bias actually measured?

Knowing that bias exists isn't enough. The harder problem is measuring it in a way that's objective and repeatable. Without clear metrics, organizations can't tell whether a system is fair or whether attempts to reduce bias are working.

Researchers often start with disparate impact.

This looks at how outcomes differ across groups. For example, if one demographic receives positive results at a much higher rate than another, that gap is a sign of bias. It's a straightforward test, but it doesn't always explain why the gap exists.
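As a minimal sketch of that test, the snippet below computes each group's rate of positive outcomes and then the ratio of the lowest rate to the highest. The function names and sample data are illustrative assumptions. In US employment contexts, a ratio below 0.8 (the "four-fifths rule") is a commonly cited rule of thumb for flagging a gap, though the appropriate threshold depends on the domain.

```python
def selection_rates(outcomes, groups):
    """Share of positive outcomes (1) per group."""
    totals, positives = {}, {}
    for outcome, group in zip(outcomes, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if outcome == 1 else 0)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(outcomes, groups):
    """Lowest group selection rate divided by the highest (1.0 means no gap)."""
    rates = selection_rates(outcomes, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received any positive outcome
    return min(rates.values()) / highest


# Hypothetical decisions: 1 = approved, 0 = rejected.
outcomes = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(outcomes, groups)
ratio = disparate_impact_ratio(outcomes, groups)
print(rates)            # {'a': 0.75, 'b': 0.25}
print(round(ratio, 2))  # 0.33
```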

Another common approach is demographic parity.

Here the idea is that positive outcomes should occur at the same rate in every group, regardless of other factors. It sets a high bar for fairness, but critics point out it can conflict with accuracy in some contexts.
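The tension with accuracy is easiest to see with a small worked example. The labels below are hypothetical and chosen so the two groups have different base rates. Under that assumption, even a perfectly accurate model fails demographic parity, and forcing parity means changing predictions that were correct.

```python
def positive_rate(predictions):
    """Share of positive predictions (1) in a group."""
    return sum(predictions) / len(predictions)


def accuracy(predictions, labels):
    """Share of predictions that match the true labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


# Hypothetical true labels: group a has a 75% base rate, group b 25%.
labels_a = [1, 1, 1, 0]
labels_b = [1, 0, 0, 0]

# A perfectly accurate model predicts the labels exactly...
perfect_a, perfect_b = list(labels_a), list(labels_b)
parity_gap = abs(positive_rate(perfect_a) - positive_rate(perfect_b))
print(parity_gap)  # 0.5, so demographic parity is violated

# ...while matching group a's 75% positive rate in group b costs accuracy.
parity_b = [1, 1, 1, 0]
forced_gap = abs(positive_rate(perfect_a) - positive_rate(parity_b))
print(forced_gap, accuracy(parity_b, labels_b))  # 0.0 0.5
```

Whether that trade is acceptable depends on why the base rates differ in the first place, which is a question the metric itself cannot answer.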

Metrics like equalized odds and calibration go further.

Equalized odds checks whether error rates (false positive and false negative rates) are balanced between groups. Calibration tests whether predicted probabilities match real-world outcomes consistently. These measures help reveal subtler forms of skew that accuracy scores alone can't capture.
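Both checks can be sketched in a few lines of plain Python. The helper names and data here are illustrative assumptions. Note that `calibration_gap` is deliberately coarse: it compares the average predicted probability with the observed positive rate for a whole group, whereas a fuller calibration analysis would usually bin predictions by score.

```python
def error_rates(labels, predictions):
    """Return (false positive rate, false negative rate) for one group."""
    false_pos = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 1)
    false_neg = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    negatives = sum(1 for y in labels if y == 0)
    positives = sum(1 for y in labels if y == 1)
    fpr = false_pos / negatives if negatives else 0.0
    fnr = false_neg / positives if positives else 0.0
    return fpr, fnr


def equalized_odds_gaps(by_group):
    """by_group maps a group name to (labels, predictions).

    Returns the largest between-group difference in FPR and in FNR.
    Both are 0.0 when equalized odds holds exactly.
    """
    rates = [error_rates(labels, preds) for labels, preds in by_group.values()]
    fprs = [r[0] for r in rates]
    fnrs = [r[1] for r in rates]
    return max(fprs) - min(fprs), max(fnrs) - min(fnrs)


def calibration_gap(labels, probabilities):
    """Mean predicted probability minus observed positive rate (coarse check)."""
    return sum(probabilities) / len(probabilities) - sum(labels) / len(labels)


# Hypothetical evaluation data for two groups.
by_group = {
    "a": ([1, 1, 0, 0], [1, 1, 0, 0]),  # no errors
    "b": ([1, 1, 0, 0], [1, 0, 1, 0]),  # one false negative, one false positive
}
fpr_gap, fnr_gap = equalized_odds_gaps(by_group)
print(fpr_gap, fnr_gap)  # 0.5 0.5

# Positive gap: the scores overstate how often the outcome actually occurs.
overconfidence = calibration_gap([1, 1, 0, 0], [0.75, 0.75, 0.75, 0.75])
print(overconfidence)  # 0.25
```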

The point is that measurement bridges theory and practice. It gives a way to quantify bias and compare systems over time.

Organizations don't have to rely on intuition. They can use evidence to guide decisions about whether an AI model is fair enough to be deployed responsibly.

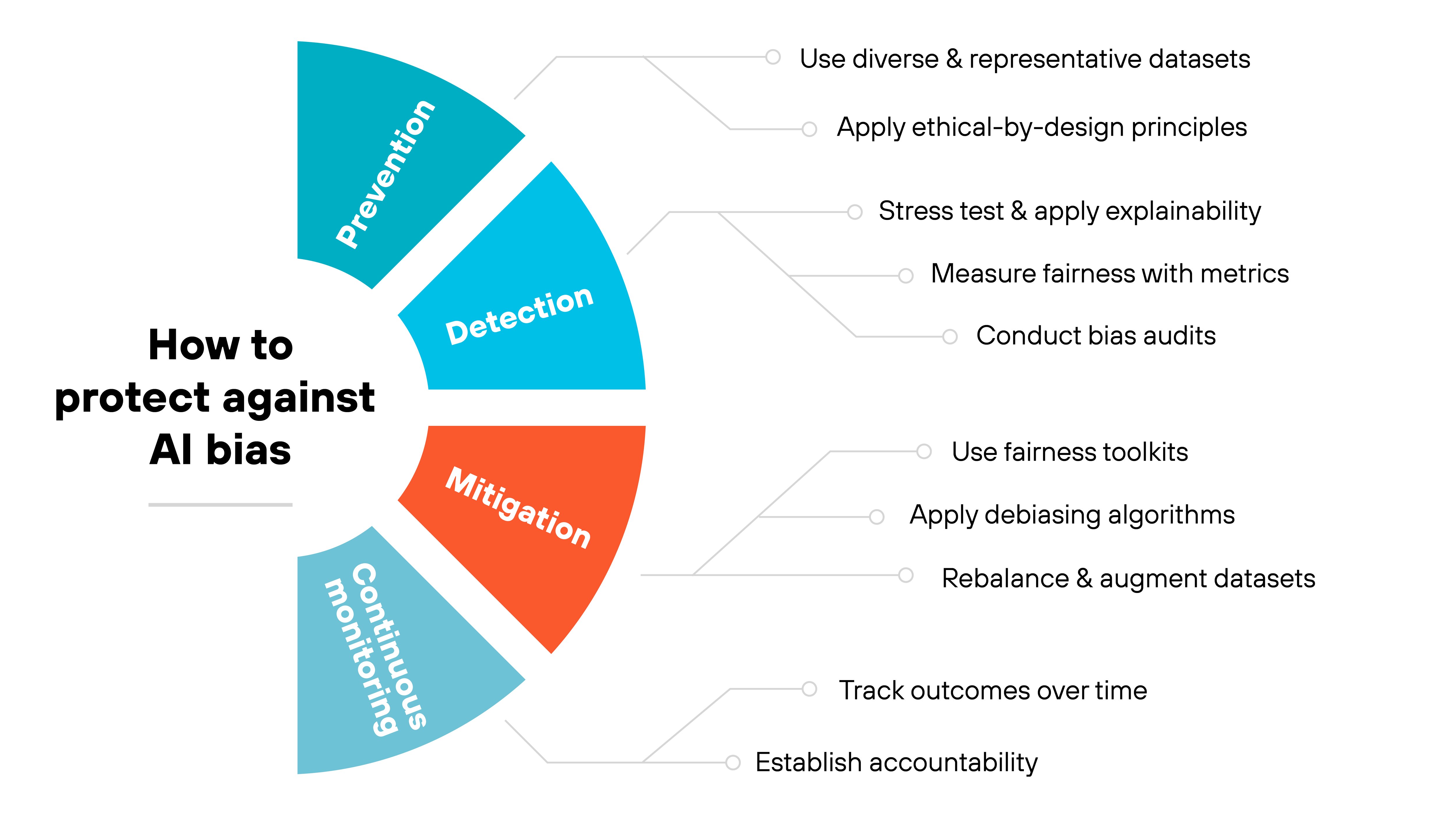

How to protect against AI bias

AI bias can't be eliminated entirely. But it can be prevented, detected, and mitigated so its impact is reduced. The most effective approach is layered and applied across the lifecycle of a system.

Let's break it down.

Prevention

Bias is easiest to address before it enters the system. Prevention starts with the data.

- Use diverse and representative datasets
  - Collect data that reflects different populations, geographies, and contexts.
  - Audit datasets to identify missing groups or overrepresentation.
  - Revisit datasets periodically to account for changes in real-world conditions.

- Apply ethical-by-design principles
  - Build fairness goals into model requirements alongside accuracy.
  - Document data sources and labeling decisions to make them transparent.
  - Establish governance reviews before training begins.
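The dataset audit step above can be sketched as a comparison between group shares in the dataset and shares in a reference population. The reference shares, group names, and function name below are hypothetical. In practice the reference would come from a source such as census data or the known user base.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Dataset share minus reference share for each reference group.

    Positive values mean the group is overrepresented in the dataset,
    negative values mean it is underrepresented.
    """
    counts = Counter(sample_groups)
    n = len(sample_groups)
    return {
        group: counts.get(group, 0) / n - share
        for group, share in reference_shares.items()
    }


# Hypothetical dataset and reference population.
sample = ["urban"] * 8 + ["rural"] * 2
reference = {"urban": 0.6, "rural": 0.3, "remote": 0.1}

gaps = representation_gaps(sample, reference)
missing = [group for group in reference if group not in set(sample)]

for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
print("missing groups:", missing)
```

Running the same audit on a schedule, with an updated reference, is one way to cover the "revisit datasets periodically" step.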

Detection

Even with strong prevention, bias often appears during testing or deployment. That's why detection is its own stage.

- Conduct bias audits
  - Test performance across demographic groups rather than just overall accuracy.
  - Use domain-specific benchmarks where available.

- Measure fairness with metrics
  - Apply measures such as disparate impact, equalized odds, or demographic parity.
  - Use multiple metrics together since each reveals different kinds of skew.

- Stress test and apply explainability
  - Run edge-case scenarios to uncover blind spots.
  - Use explainable AI (XAI) tools to see which features influence outputs.
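A basic bias audit, as described in the first step above, breaks a single accuracy number down by group. The sketch below uses hypothetical labels and group names to show how a respectable overall score can hide a complete failure on a small group.

```python
def accuracy_by_group(labels, predictions, groups):
    """Accuracy computed separately for each group."""
    correct, total = {}, {}
    for y, p, g in zip(labels, predictions, groups):
        total[g] = total.get(g, 0) + 1
        correct[g] = correct.get(g, 0) + (1 if y == p else 0)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical evaluation set: 8 majority-group rows, 2 minority-group rows.
labels = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
predictions = [1, 0, 1, 0, 1, 0, 1, 0, 0, 1]
groups = ["majority"] * 8 + ["minority"] * 2

overall = sum(y == p for y, p in zip(labels, predictions)) / len(labels)
per_group = accuracy_by_group(labels, predictions, groups)

print(overall)    # 0.8
print(per_group)  # {'majority': 1.0, 'minority': 0.0}
```

The same breakdown works for any metric, so it pairs naturally with the fairness measures listed in the second step.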

Mitigation

When bias is identified, mitigation strategies help reduce it. These techniques are applied once a trained or deployed model has shown bias: some rework the data and retrain, while others adjust the model or its outputs.

- Rebalance and augment datasets
  - Add more examples from underrepresented groups.
  - Apply data augmentation techniques to strengthen coverage where real-world data is scarce.

- Apply debiasing algorithms
  - Adjust model weights or outputs to reduce skew.
  - Test carefully to ensure fairness improvements don't compromise reliability.

- Use fairness toolkits
  - Use open-source tools like AI Fairness 360 (a Linux Foundation AI incubation project) or Google's What-If Tool.
  - Standardize mitigation practices across teams to make them repeatable.
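When collecting more data isn't possible, one way to rebalance is to reweight the examples already in the dataset. The sketch below illustrates the reweighing idea, where each example gets the weight P(group) × P(label) / P(group, label), in plain Python. It is an assumption-laden illustration with hypothetical data, not the API of any of the toolkits named above.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights that make group and label statistically independent.

    weight = P(group) * P(label) / P(group, label), estimated from counts.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    rows = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for y, w in rows if y == 1) / sum(w for _, w in rows)


# Hypothetical training data: group a is mostly positive, group b mostly negative.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
print(round(rate_a, 2), round(rate_b, 2))  # 0.5 0.5
```

After weighting, both groups show the same positive rate, so a model trained with these values as per-sample weights no longer sees group membership as predictive of the label in the training data. As the list above notes, the retrained model still needs testing to confirm reliability hasn't suffered.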

Continuous monitoring

Bias management doesn't end with deployment. Models adapt to new data and environments, which means bias can reemerge.

- Track outcomes over time
  - Monitor error rates and fairness metrics continuously.
  - Compare current outputs with baseline measurements from earlier testing.

- Establish accountability
  - Assign ownership for bias monitoring across data science and compliance teams.
  - Document findings and share them with stakeholders.
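The baseline comparison in the tracking step can be as simple as a drift check that runs whenever metrics are recomputed. The metric names, values, and the 0.05 tolerance below are hypothetical. Appropriate thresholds depend on the system and the metric.

```python
def fairness_alerts(baseline, current, tolerance=0.05):
    """Return metrics that moved more than `tolerance` away from baseline.

    Maps each drifting metric name to (baseline value, current value).
    Metrics without a recorded baseline are skipped.
    """
    return {
        name: (baseline[name], value)
        for name, value in current.items()
        if name in baseline and abs(value - baseline[name]) > tolerance
    }


# Hypothetical measurements from pre-deployment testing and from production.
baseline = {"disparate_impact_ratio": 0.92, "fnr_gap": 0.03}
current = {"disparate_impact_ratio": 0.78, "fnr_gap": 0.04}

alerts = fairness_alerts(baseline, current)
print(alerts)  # {'disparate_impact_ratio': (0.92, 0.78)}
```

Routing these alerts to a named owner, rather than a shared dashboard, is what connects the tracking step to the accountability step.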

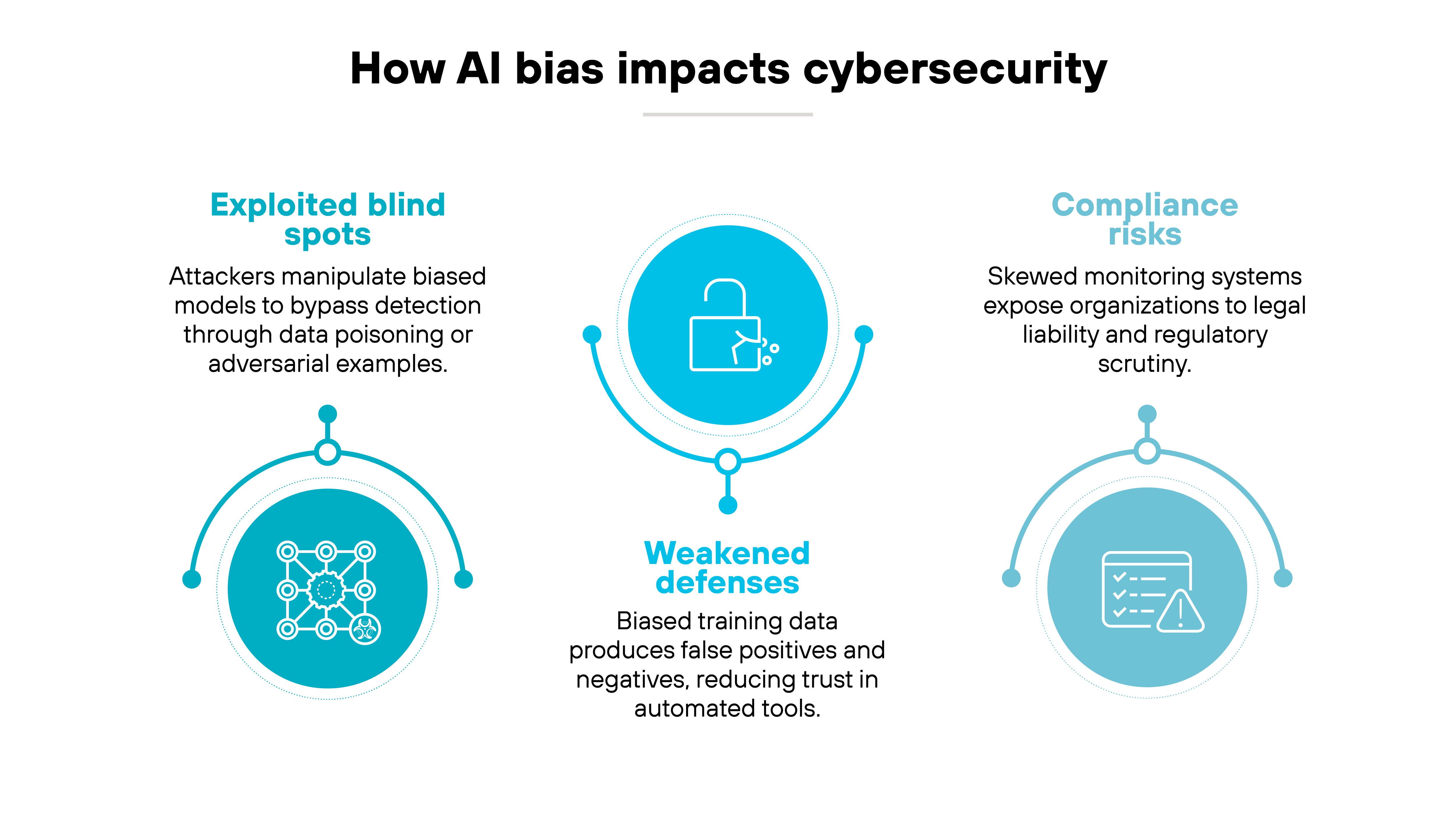

Why does AI bias matter for cybersecurity?

In AI-driven cybersecurity, AI bias isn't only an ethical concern. It can become a direct vulnerability.

Here's why.

Attackers look for weaknesses in how models are trained and used. For example, if an intrusion detection system is biased toward certain traffic patterns, an adversary can exploit the blind spot through data poisoning or adversarial examples.

In other words, bias doesn't just skew outcomes. It can be weaponized.

Bias also shows up inside the tools defenders rely on. Skewed training data can produce false positives that overwhelm analysts or false negatives that miss real threats. Over time, both outcomes reduce trust in automated defenses and make it harder for teams to respond effectively.

There's another dimension. Many organizations now use AI for insider threat monitoring or employee risk scoring. If those systems reflect bias, they can expose companies to compliance violations and legal liability. For instance, uneven outcomes across demographic groups can trigger regulatory scrutiny or lawsuits.

Put simply: AI bias compromises the trustworthiness of security systems themselves, which makes it not only an ethical concern but a direct AI security issue that enterprises need to manage head-on.

